The artificial intelligence revolution is accelerating at breakneck speed, but there’s a critical problem: traditional cybersecurity frameworks weren’t designed for AI’s unique risks. While organizations rush to deploy AI systems—from ChatGPT-powered customer service bots to predictive analytics engines—they’re often flying blind when it comes to security.

That’s about to change. The National Institute of Standards and Technology (NIST) has announced an initiative to develop specialized security control overlays for AI systems, building on its battle-tested SP 800-53 catalog of security and privacy controls.

The AI Security Gap: Why Traditional Cybersecurity Falls Short

Imagine trying to secure a self-driving car using the same security protocols you’d use for a basic calculator. That’s essentially what many organizations are doing with AI systems today. Traditional cybersecurity frameworks focus on protecting data at rest, in transit, and during processing. But AI introduces entirely new attack vectors.

Consider these AI-specific threats that traditional security frameworks struggle to address:

- Prompt injection attacks where malicious users manipulate AI responses through clever input crafting

- Model poisoning where attackers corrupt training data to influence AI behavior

- Adversarial examples that trick AI systems into making wrong decisions

- Data reconstruction attacks where sensitive training data is extracted from deployed models

These aren’t theoretical concerns. In 2023 alone, we’ve seen real-world incidents where AI chatbots were manipulated to reveal sensitive information, recommendation algorithms were gamed to spread misinformation, and image recognition systems were fooled by strategically placed stickers.

Enter NIST’s SP 800-53 AI Security Overlays

NIST’s proposed solution is elegantly simple yet powerful: create specialized “overlays” that adapt the proven SP 800-53 cybersecurity framework specifically for AI systems. Think of overlays as customizable security templates—like having different playbooks for securing a bank vault versus a research laboratory.

The SP 800-53 framework has been the gold standard for federal cybersecurity for years, with more than 1,000 controls and control enhancements covering everything from access management to incident response. Rather than reinventing the wheel, NIST is adapting these battle-tested controls for AI’s unique challenges.

Why This Approach Works

Familiarity breeds adoption: Organizations already using SP 800-53 can seamlessly integrate AI security without learning entirely new frameworks.

Flexibility without chaos: Instead of one-size-fits-all security, overlays let organizations pick and choose controls based on their specific AI use cases.

Proven foundation: Building on SP 800-53’s mature control structure ensures comprehensive coverage without starting from scratch.
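To make the overlay idea concrete, here is a minimal sketch of tailoring a control baseline. The control IDs are real SP 800-53 identifiers, but the `Overlay` class, the `apply_overlay` function, and the contents of the example overlay are assumptions for illustration; they are not taken from any NIST draft.

```python
from dataclasses import dataclass, field


@dataclass
class Overlay:
    """Hypothetical overlay: changes to apply on top of a control baseline."""
    name: str
    add: set = field(default_factory=set)         # control IDs to add
    remove: set = field(default_factory=set)      # control IDs to drop
    guidance: dict = field(default_factory=dict)  # extra notes per control ID


def apply_overlay(baseline: set, overlay: Overlay) -> set:
    """Return the tailored control set; the baseline itself is not modified."""
    return (baseline - overlay.remove) | overlay.add


# Illustrative baseline: least privilege, event logging, input validation.
baseline = {"AC-6", "AU-2", "SI-10"}

# Assumed overlay content, NOT taken from any NIST draft.
genai_overlay = Overlay(
    name="generative-ai (illustrative)",
    add={"SI-4"},  # system monitoring
    guidance={"SI-10": "Treat user prompts as untrusted input."},
)

tailored = apply_overlay(baseline, genai_overlay)
print(sorted(tailored))
```

The point of the design is that the baseline stays untouched, so the same starting set can be tailored differently for each AI use case.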

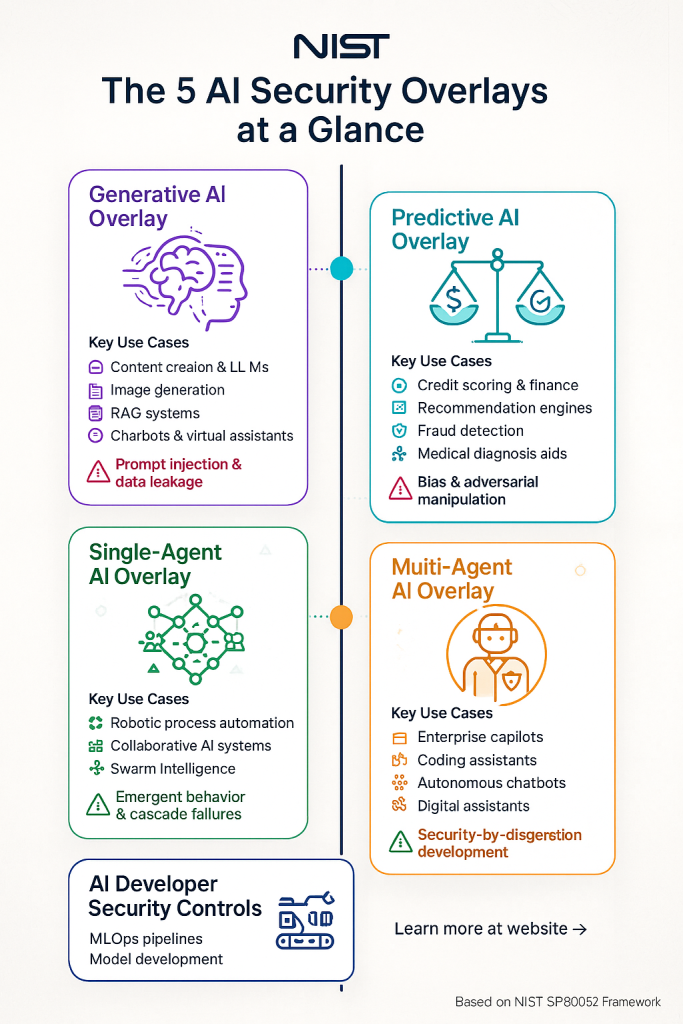

The Five AI Security Overlays: Your Complete Defense Arsenal

NIST has identified five distinct AI use cases, each requiring its own specialized security approach. Let’s explore how each overlay addresses specific threats and protections.

1. Generative AI Overlay: Taming the Creative Beast

What it covers: Large Language Models (LLMs), image generators, content creation systems, and Retrieval-Augmented Generation (RAG) applications.

Generative AI is like having a brilliant but unpredictable employee who can create amazing content but might occasionally share company secrets or generate inappropriate material. This overlay focuses on controlling what goes in and what comes out.

Key security measures include:

- Input sanitization controls that screen for malicious prompts attempting to manipulate the AI

- Output monitoring systems that flag potentially harmful or sensitive content before it reaches users

- Content filtering guardrails that prevent generation of inappropriate, biased, or dangerous material

- Audit logging that tracks every interaction for compliance and incident investigation

Real-world example: A law firm using an AI writing assistant needs controls to prevent the system from accidentally including confidential client information in generated documents, while also blocking attempts to make the AI generate legal advice outside its expertise.
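As a rough sketch of what input screening and output monitoring could look like, the snippet below checks prompts against a few injection phrases and redacts sensitive-looking strings from generated text. The pattern lists and the function names `screen_prompt` and `redact_output` are hypothetical, and simple pattern matching like this is easy to evade; a real deployment would need far more than regular expressions.

```python
import re

# Assumed example phrases; real injection attempts are far more varied.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]

# Assumed examples of sensitive data shapes (email address, US SSN format).
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def screen_prompt(prompt: str) -> bool:
    """Return True if the prompt matches none of the known injection patterns."""
    return not any(p.search(prompt) for p in INJECTION_PATTERNS)


def redact_output(text: str):
    """Redact sensitive-looking substrings; return (clean_text, labels_found)."""
    findings = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(text):
            findings.append(label)
            text = pattern.sub(f"[REDACTED {label}]", text)
    return text, findings


if __name__ == "__main__":
    print(screen_prompt("Summarize this contract in plain English."))
    print(redact_output("Contact jane@example.com, SSN 123-45-6789"))
```

The list of labels returned by `redact_output` is the kind of detail an audit log entry could record for later investigation.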

2. Predictive AI Overlay: Ensuring Fair and Accurate Decisions

What it covers: Recommendation engines, credit scoring systems, fraud detection, medical diagnosis aids, and any AI making consequential decisions about people.

Predictive AI systems are like digital judges—they make decisions that can significantly impact lives and businesses. This overlay ensures these decisions are not only accurate but also fair and explainable.

Critical protections include:

- Bias detection and mitigation controls that monitor for discriminatory patterns in AI decisions

- Model robustness testing against adversarial attacks designed to manipulate predictions

- Explainability requirements ensuring stakeholders can understand how decisions are made

- Data lineage tracking that maintains clear records of training data sources and quality

Real-world example: A bank’s AI loan approval system needs protections against both adversarial attacks (someone gaming the system to get approved inappropriately) and discriminatory bias (ensuring the system doesn’t unfairly deny loans based on protected characteristics).
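One simple way to monitor for discriminatory patterns is to compare approval rates across groups. The sketch below computes a disparate impact ratio (lowest group rate divided by highest). The function names, the sample data, and the 0.8 alert threshold are assumptions for illustration; the threshold echoes the commonly cited four-fifths heuristic, and it is only one of many possible fairness checks.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs; returns rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Made-up sample: group A has 8 of 10 approved, group B has 5 of 10.
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5

rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)
ALERT_THRESHOLD = 0.8  # assumed threshold for flagging a review
needs_review = ratio < ALERT_THRESHOLD
print(rates, round(ratio, 3), needs_review)
```

A ratio below the threshold does not prove bias on its own; it is a signal that the decisions deserve a closer look.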

3. Single-Agent AI Overlay: Securing Your Digital Assistant

What it covers: Enterprise copilots, coding assistants, autonomous chatbots, and any AI system acting independently on behalf of users.

Single-agent AI systems are like giving an intern access to your entire digital workspace. They need careful supervision and clear boundaries to prevent overreach or misuse.

Essential security controls include:

- Permission boundaries that limit what actions the AI can take and what data it can access

- Human oversight mechanisms requiring approval for high-stakes decisions or actions

- Session management controlling how long the AI can operate autonomously

- Plugin security ensuring third-party integrations don’t introduce vulnerabilities

Real-world example: A code generation assistant in a software development environment needs controls to prevent it from accessing sensitive repositories while still being helpful for routine coding tasks.
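Permission boundaries and human oversight can be combined in a single authorization check that runs before the agent acts. The following is a minimal sketch; `AgentPolicy`, `authorize`, the action names, and the repository paths are all hypothetical, and the prefix check is deliberately naive (it does not normalize paths).

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"  # pause and ask a human
    DENY = "deny"


@dataclass(frozen=True)
class AgentPolicy:
    allowed_actions: frozenset
    high_stakes_actions: frozenset
    allowed_path_prefixes: tuple


def authorize(policy: AgentPolicy, action: str, resource: str) -> Decision:
    """Deny by default; require human approval for high-stakes actions."""
    if action not in policy.allowed_actions:
        return Decision.DENY
    if not resource.startswith(policy.allowed_path_prefixes):
        return Decision.DENY
    if action in policy.high_stakes_actions:
        return Decision.NEEDS_APPROVAL
    return Decision.ALLOW


# Hypothetical policy for a coding assistant limited to one repository.
policy = AgentPolicy(
    allowed_actions=frozenset({"read", "write", "delete"}),
    high_stakes_actions=frozenset({"delete"}),
    allowed_path_prefixes=("repos/web-app/",),
)

print(authorize(policy, "read", "repos/web-app/src/main.py"))
print(authorize(policy, "read", "repos/payments-secrets/keys.env"))
print(authorize(policy, "delete", "repos/web-app/old_module.py"))
```

Returning a three-way decision rather than a boolean keeps the human-approval path explicit instead of folding it into "deny".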

4. Multi-Agent AI Overlay: Orchestrating the AI Symphony

What it covers: Robotic process automation with multiple bots, collaborative AI systems, swarm intelligence applications, and any scenario where multiple AI agents interact.

Multi-agent AI systems are like managing a team of specialists who need to coordinate without stepping on each other’s toes—or being manipulated into working against the organization’s interests.

Coordination security measures include:

- Inter-agent communication protocols that ensure secure and authenticated message passing

- Behavioral monitoring to detect emergent behaviors that weren’t programmed

- Isolation mechanisms that contain the impact if one agent becomes compromised

- Governance frameworks defining roles, responsibilities, and escalation procedures

Real-world example: A supply chain management system using multiple AI agents to handle inventory, ordering, and logistics needs protections against coordinated attacks where compromising one agent could cascade through the entire system.
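Authenticated message passing between agents can be sketched with a keyed hash (HMAC) from Python’s standard library. The message layout, the function names, and the shared-key setup below are assumptions for illustration; a production system would also need key management, replay protection, and per-agent keys.

```python
import hashlib
import hmac
import json
from typing import Optional


def sign_message(key: bytes, sender: str, payload: dict) -> dict:
    """Serialize the message deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps({"sender": sender, "payload": payload}, sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(key: bytes, message: dict) -> Optional[dict]:
    """Return the decoded message if the tag is valid, otherwise None."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["tag"]):
        return None
    return json.loads(message["body"])


if __name__ == "__main__":
    shared_key = b"demo-key-do-not-use-in-production"  # assumed shared secret
    msg = sign_message(shared_key, "inventory-agent", {"sku": "A-100", "reorder": 40})
    print(verify_message(shared_key, msg))

    # A tampered body no longer matches its tag, so verification fails.
    msg["body"] = msg["body"].replace("40", "4000")
    print(verify_message(shared_key, msg))
```

Rejecting unverified messages at each agent is one way to keep a single compromised agent from silently steering the others.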

5. AI Developer Security Controls Overlay: Building Security from the Ground Up

What it covers: Model development teams, data scientists, MLOps pipelines, and the entire AI system development lifecycle.

This overlay is like having security-conscious architects design the building rather than trying to retrofit security afterward. It embeds protection into every stage of AI development.

Development security encompasses:

- Secure training environments with proper access controls and data protection

- Supply chain security for open-source models, datasets, and development tools

- Version control and provenance tracking ensuring you know exactly what’s in your AI system

- Threat modeling specifically designed for AI attack vectors

Real-world example: A pharmaceutical company developing AI for drug discovery needs controls to protect proprietary research data during model training while ensuring the final AI system can’t be reverse-engineered to reveal trade secrets.
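Provenance tracking often starts with something simple: recording a cryptographic hash of every model file and dataset, then checking later that nothing has changed. Here is a minimal sketch using SHA-256; the function names and the manifest layout (a path-to-digest dictionary) are assumptions, not a standard format.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths) -> dict:
    """Map each artifact path to its SHA-256 digest."""
    return {str(p): sha256_file(Path(p)) for p in paths}


def changed_artifacts(manifest: dict) -> list:
    """Return paths that are missing or whose contents no longer match."""
    return [
        p for p, digest in manifest.items()
        if not Path(p).exists() or sha256_file(Path(p)) != digest
    ]


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        weights = Path(tmp) / "model.bin"
        weights.write_bytes(b"weights-v1")
        manifest = build_manifest([weights])
        print(changed_artifacts(manifest))  # nothing changed yet

        weights.write_bytes(b"weights-v2")  # simulate tampering or an untracked update
        print(changed_artifacts(manifest))
```

Storing the manifest alongside version control history gives a checkable record of exactly which artifacts went into a given release.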

Integration with NIST’s Broader AI Security Ecosystem

These overlays don’t exist in isolation. They’re part of NIST’s broader approach to AI governance and security, and they are meant to work alongside:

AI Risk Management Framework (AI RMF): Provides the strategic risk assessment foundation that guides which overlays and controls to implement.

SP 800-218A: Offers secure software development practices specifically for generative AI and dual-use foundation models.

AI 100-2 E2025: Delivers a detailed taxonomy of adversarial machine learning attacks and their mitigations.

Draft AI 800-1: Addresses the unique challenges of managing misuse risks in dual-use foundation models.

Think of it as a complete security ecosystem where each component reinforces the others, creating multiple layers of protection.

What This Means for Your Organization

Immediate Actions You Can Take

- Assess your current AI inventory: Catalog all AI systems in your organization and classify them according to NIST’s five use cases.

- Evaluate existing security gaps: Compare your current AI security measures against the overlay frameworks (even in their preliminary form).

- Engage with the development process: NIST is actively seeking feedback—join their Slack channel or submit comments to influence the final standards.

- Start planning for compliance: Begin conversations with your security and compliance teams about how these overlays might impact your governance frameworks.
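The inventory step can start as a small structured list rather than a spreadsheet. The sketch below classifies systems against the five use cases named in this article; the `AISystem` record, its fields, and the example systems are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from enum import Enum


class UseCase(Enum):
    GENERATIVE = "Generative AI"
    PREDICTIVE = "Predictive AI"
    SINGLE_AGENT = "Single-Agent AI"
    MULTI_AGENT = "Multi-Agent AI"
    DEVELOPER = "AI Developer Security Controls"


@dataclass
class AISystem:
    name: str
    owner: str
    use_cases: set = field(default_factory=set)  # a system may match several


def group_by_use_case(systems) -> dict:
    """Map each use case to the names of the systems classified under it."""
    grouped = defaultdict(list)
    for system in systems:
        for use_case in system.use_cases:
            grouped[use_case].append(system.name)
    return dict(grouped)


def unclassified(systems) -> list:
    """Systems nobody has classified yet; these are the first gap to close."""
    return [s.name for s in systems if not s.use_cases]


# Made-up inventory entries for illustration.
inventory = [
    AISystem("support-chatbot", "customer-success", {UseCase.GENERATIVE}),
    AISystem("fraud-scoring", "risk", {UseCase.PREDICTIVE}),
    AISystem("code-assistant", "engineering", {UseCase.GENERATIVE, UseCase.SINGLE_AGENT}),
    AISystem("legacy-forecaster", "finance"),
]

print(group_by_use_case(inventory))
print(unclassified(inventory))
```

Allowing more than one use case per system matters because a single product, such as a coding assistant, can fall under several overlays at once.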

Timeline and Implementation

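The first public draft overlay is expected in early fiscal year 2026 (the U.S. federal fiscal year 2026 begins on October 1, 2025), followed by workshops and extensive stakeholder engagement. Depending on how long finalization takes, this could give organizations roughly 18 months to prepare for implementation.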

Early adopters who engage with the development process will have significant advantages in understanding and implementing the final standards. They’ll also have opportunities to influence the frameworks to better suit real-world operational needs.

The Competitive Advantage of Early Adoption

Organizations that proactively implement AI security overlays will gain several strategic advantages:

Customer trust: Demonstrating robust AI security practices builds confidence with clients and partners.

Regulatory readiness: As AI regulations evolve globally, having NIST-aligned security frameworks positions organizations ahead of compliance requirements.

Risk mitigation: Preventing AI security incidents is far less costly than responding to breaches or compliance failures.

Operational efficiency: Well-secured AI systems operate more reliably and require less manual oversight.

Looking Ahead: The Future of AI Security

NIST’s AI security overlays represent a crucial step toward mature AI governance, but they’re just the beginning. As AI technology continues evolving—with advances in multimodal AI, autonomous systems, and artificial general intelligence—these frameworks will need continuous updates and refinements.

Organizations that build security-conscious AI practices now will be better positioned to adapt to future developments and regulatory requirements. They’ll also contribute to the broader ecosystem of responsible AI development.

The question isn’t whether AI security frameworks will become mandatory—it’s whether your organization will be ready when they do.

Key Takeaways

- NIST’s AI security overlays adapt proven cybersecurity controls for AI-specific risks

- Five specialized overlays address different AI use cases, from generative AI to multi-agent systems

- Early engagement with the development process offers strategic advantages

- Implementation timeline begins with the first public draft in early fiscal year 2026

- Proactive adoption builds competitive advantages in trust, compliance, and operational efficiency

The AI revolution is unstoppable, but it doesn’t have to be ungovernable. With NIST’s structured approach to AI security, organizations can harness AI’s transformative power while maintaining the trust and protection their stakeholders demand.
