TL;DR – The Stakes Hidden in Plain Sight

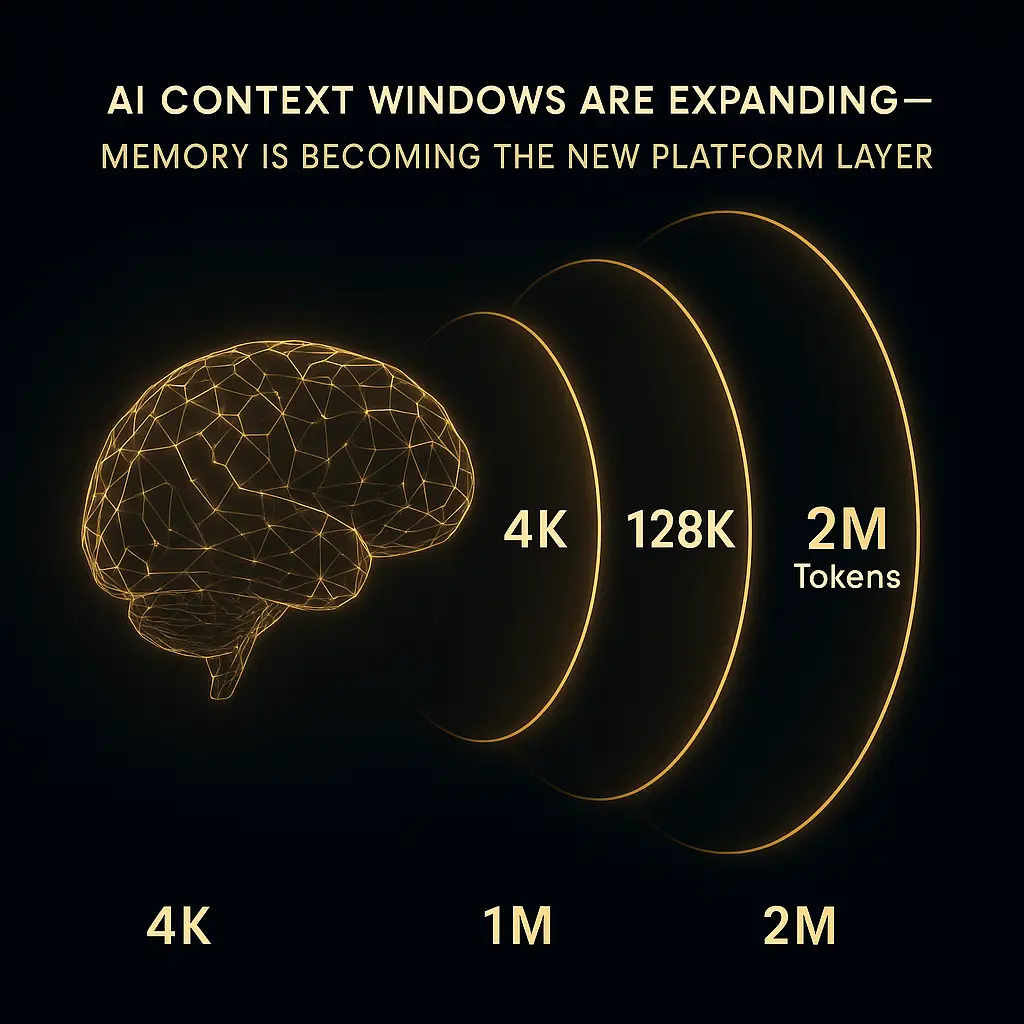

Just as RAM defined computing capability and browser market share determined internet control, context window size is becoming the defining constraint of AI utility. From Claude’s 200K tokens to Gemini’s 2M window, we’re watching a new Moore’s Law unfold—but this time, the implications are about relationship depth, not processing speed.

The race to expand AI context windows isn’t just a technical competition. It’s a fundamental battle for who controls the persistence layer of human-AI interaction, with implications as profound as the browser wars of the 1990s.

This isn’t a specs race. It’s a platform war where the prize is nothing less than becoming the persistent layer of human knowledge work.

Think about it: what made Microsoft Office unstoppable wasn’t features—it was file formats. What made Facebook dominant wasn’t the newsfeed—it was the social graph. And what will determine the winner in AI isn’t who has the smartest model—it’s who controls the memory layer of how humans and AI work together.

The context window wars are the new platform wars. And most people don’t even know they’re happening.

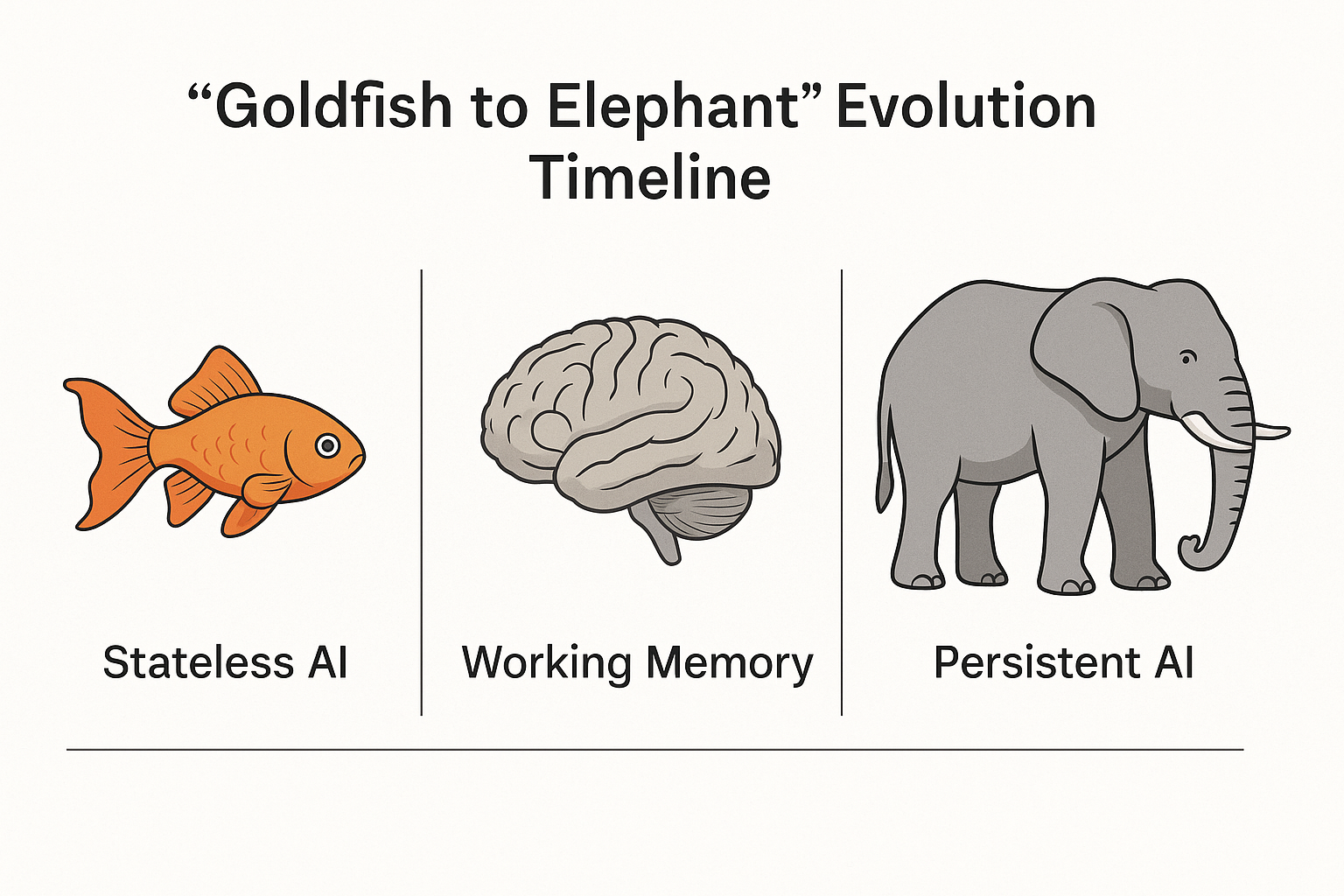

From Goldfish to Elephants: The Evolution of AI Memory

To understand why context windows matter, we need to first understand how we got here. The journey from stateless to stateful AI isn’t just a technical evolution—it’s a fundamental shift in what these systems can be.

Era 1: The Goldfish Phase (2020-2023)

Early language models were brilliant goldfish. Every conversation started from zero. You could have a profound discussion about quantum mechanics, and thirty seconds later, the AI would have no idea who you were or what you’d discussed. This wasn’t just a limitation; it shaped how we used these tools. They were good for one-shot tasks: “Write me an email,” “Explain this concept,” “Generate some code.”

The constraint was brutal: roughly 4,000 tokens for the GPT-3.5 models behind the first ChatGPT, 8,000 for early GPT-4. Four thousand tokens is about 3,000 words, less than this essay. Try collaborating on anything substantial and you’d hit the wall fast. Users developed workarounds: summary chains, careful prompt management, external memory stores. We accepted this limitation the way we once accepted dial-up speeds.
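The arithmetic behind that word count, and the crudest of those workarounds, can be sketched in a few lines. This is a minimal illustration, not any vendor's tokenizer: the 0.75 words-per-token ratio is a common rule of thumb for English text, and `approx_words`, `approx_tokens`, and `trim_history` are hypothetical helper names.

```python
# Rough rule of thumb (an assumption, not a real tokenizer):
# one token is about 0.75 English words.
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit in a token budget."""
    return int(tokens * WORDS_PER_TOKEN)


def approx_tokens(text: str) -> int:
    """Estimate the token count of a text from its word count."""
    words = len(text.split())
    # Add one token so the estimate errs on the high side.
    return int(words / WORDS_PER_TOKEN) + 1


def trim_history(messages: list[str], budget_tokens: int) -> list[str]:
    """Keep the most recent messages that fit in the token budget.

    Older messages are dropped first. This is prompt management in its
    simplest form; a summary chain would compress them instead.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = approx_tokens(message)
        if used + cost > budget_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    print(approx_words(4_000))  # 3000
    print(approx_words(8_000))  # 6000
```

Dropping the oldest turns is the simplest policy. Real tools of that period often replaced them with a running summary instead, which trades fidelity for space.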

Era 2: The Working Memory Phase (2023-2024)

Then came the explosion. Claude pushed to 100K tokens, then 200K. GPT-4 Turbo hit 128K. Gemini 1.5 Pro reached 1M. Suddenly, you could paste entire codebases, full research papers, complete book manuscripts. The goldfish grew a working memory.

But this created a new problem: context without continuity. You could have an incredibly deep session analyzing your company’s entire codebase, but start a new conversation and—poof—all that shared understanding vanished. It was like having a brilliant colleague with perfect recall during meetings but complete amnesia between them.

Era 3: The Persistence Layer (2024-Present)

Now we’re watching the third phase emerge: persistent, cross-session memory. ChatGPT’s Memory feature, Claude’s Projects, Google’s cross-product Gemini integration. These aren’t just features. They’re attempts to own the persistence layer of human-AI collaboration.

This is where things get interesting. And dangerous. And potentially game-changing.

The Context Window as Real Estate

Here’s the insight most people miss: context windows aren’t just about how much information an AI can process. They’re about how much relationship equity you can build with a system.

Think of context like real estate in a city. In the 4K token era, you were camping. No permanence, no investment, no accumulated value. In the 100K era, you could rent an apartment—enough space for real work, but still transient. In the 2M token era with persistence? You’re building equity. Every interaction adds value to your “property.”

This real estate has unique characteristics:

Compound Value: Unlike physical space, context compounds. Every document you share, every conversation you have, every preference expressed makes future interactions more valuable. It’s like a house that gets bigger and more valuable every time you use it.

Switching Costs: When your AI knows your entire project history, coding style, writing voice, and work patterns, switching to another system isn’t just inconvenient—it’s expensive. You lose all that accumulated context. It’s the ultimate lock-in.

Network Effects: As more of your work lives in one AI’s context, it becomes the natural place for more work. Why start from scratch explaining your project to Claude when Gemini already knows everything? The rich get richer.

Depreciation Risk: But here’s the dark side—context can depreciate. If you stop using a system, that accumulated understanding becomes stale. Your AI’s model of your work diverges from reality. The relationship atrophies.

The New Data Moats

Traditional tech moats came from data collection. Google knew what you searched for. Facebook knew who your friends were. Amazon knew what you bought. But AI context windows create a fundamentally different kind of moat: earned understanding.

This isn’t data that can be scraped, bought, or stolen. It’s understanding that can only be built through interaction. When an AI knows not just what you’re working on, but how you think about problems, how you like information presented, what context you need for decisions—that’s not data. That’s relationship.

Consider the switching costs:

Traditional Software: Export your files, learn new interface. Annoying but doable.

Traditional Web Platforms: Lose your history, rebuild your graph. Painful but possible.

AI with Deep Context: Lose hundreds of hours of accumulated understanding. It’s not just data portability—it’s relationship portability. And relationships can’t be exported as JSON.

This creates a moat that’s both deeper and more personal than anything we’ve seen. It’s not just about network effects or data advantages—it’s about the AI becoming a bespoke tool shaped by every interaction you’ve had with it.

The Architecture of Memory

Understanding how different platforms approach memory reveals their strategic bets:

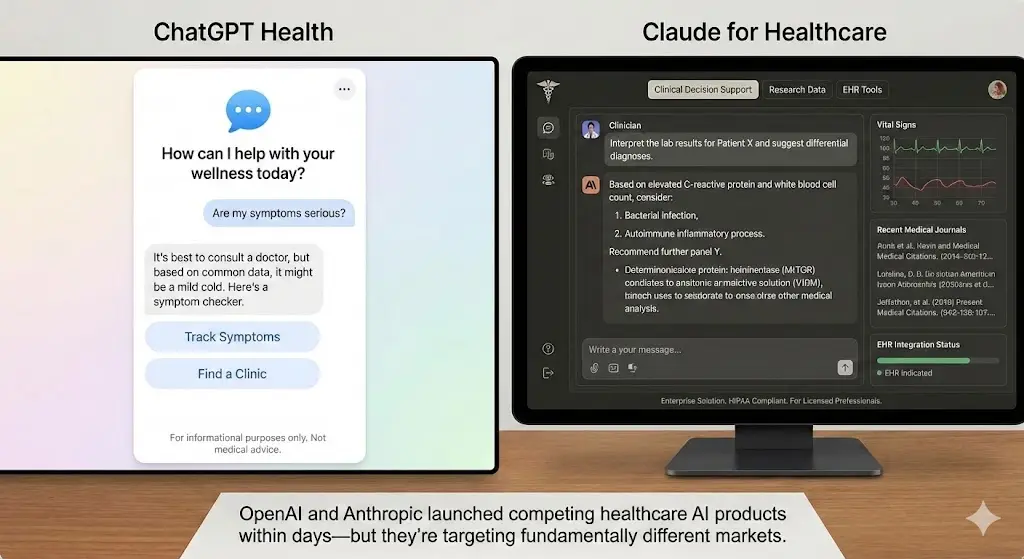

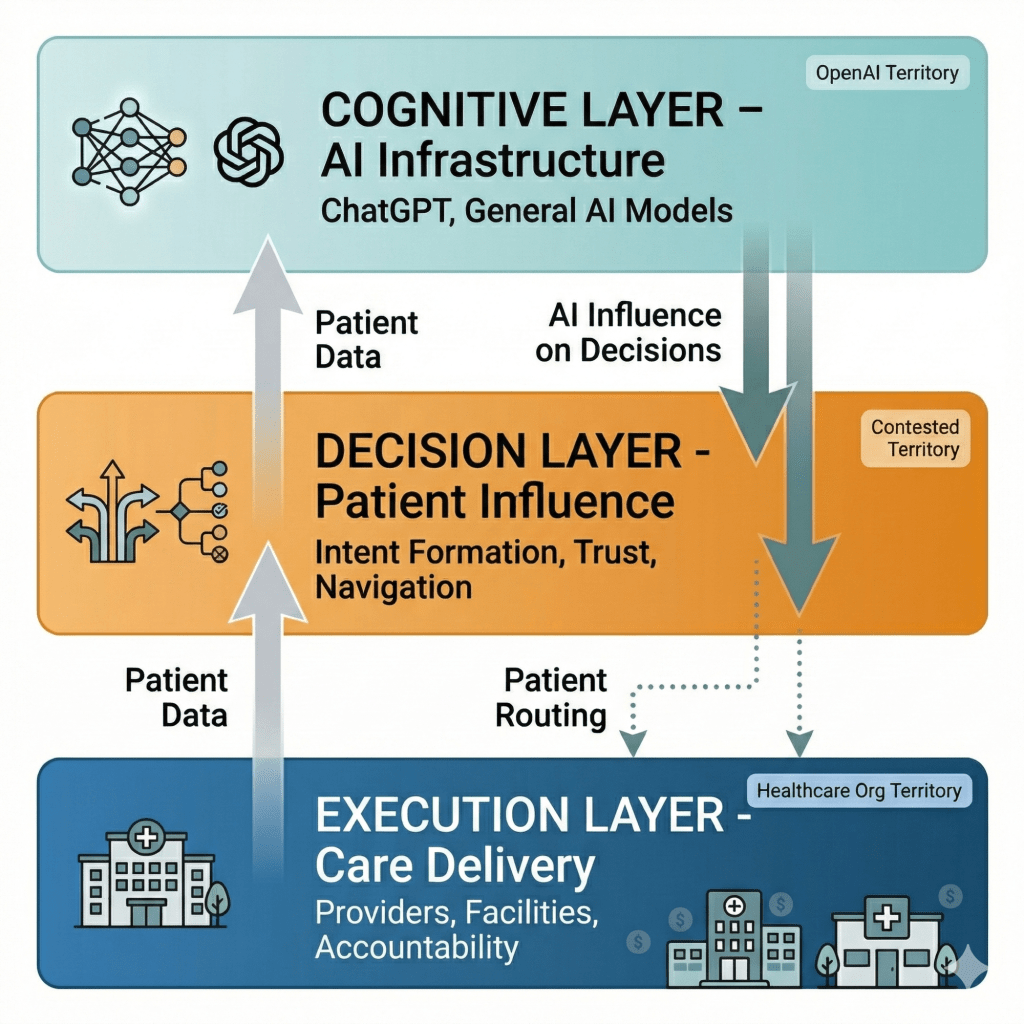

OpenAI’s Approach: Explicit Memory

ChatGPT’s Memory feature is fascinatingly transparent. It tells you what it remembers, lets you view and delete memories, and makes the persistence layer visible. This is memory as a feature: controllable, manageable, but also limited.

The strategy is classic OpenAI: ship fast, iterate in public, let usage patterns emerge. But the implementation reveals constraints. Memories are key-value pairs, not rich context. It’s more like a contact database than a working relationship.

Anthropic’s Approach: Constitutional Context

Claude’s Projects feature takes a different path. Instead of remembering across all conversations, it creates persistent workspaces. You build context within a project, and that context persists across sessions within that boundary.

This is memory with boundaries—more structured, more intentional, potentially more powerful for deep work but less fluid for general use. It’s betting that users want memory with clear boundaries, not an omniscient assistant.
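The difference between the two designs is easier to see as data structures. The sketch below is a toy contrast under stated assumptions, not how either product is actually built: `GlobalMemory` and `ProjectMemory` are hypothetical classes that model only the scoping behavior described above.

```python
from dataclasses import dataclass, field


@dataclass
class GlobalMemory:
    """Flat key-value facts shared across every conversation."""

    facts: dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value

    def forget(self, key: str) -> None:
        # Individual memories can be inspected and deleted.
        self.facts.pop(key, None)

    def context_for(self, project: str) -> list[str]:
        # The same facts are visible no matter which project is active.
        return [f"{key}: {value}" for key, value in self.facts.items()]


@dataclass
class ProjectMemory:
    """Context that persists only inside a named workspace."""

    projects: dict[str, list[str]] = field(default_factory=dict)

    def add(self, project: str, note: str) -> None:
        self.projects.setdefault(project, []).append(note)

    def context_for(self, project: str) -> list[str]:
        # Nothing leaks across the project boundary.
        return list(self.projects.get(project, []))


if __name__ == "__main__":
    shared = GlobalMemory()
    shared.remember("tone", "concise")
    print(shared.context_for("thesis"), shared.context_for("taxes"))

    scoped = ProjectMemory()
    scoped.add("thesis", "Chapter 2 draft is due Friday")
    print(scoped.context_for("thesis"), scoped.context_for("taxes"))
```

The global store answers every request with the same small set of facts. The scoped store can hold far richer material, but only for the workspace it was given to.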

Google’s Approach: The Ambient Platform

Gemini’s 2-million-token window isn’t just about size; it’s about Google’s platform ambitions. With Gemini integrated across Gmail, Docs, Drive, and Calendar, Google isn’t building memory. It’s building an ambient AI layer that already has access to your digital life.

Why remember when you can just access? This is the most ambitious play: make memory irrelevant by making context ubiquitous. Your AI doesn’t need to remember your projects when it can see all your files. It doesn’t need to learn your schedule when it has your calendar.

Microsoft’s Approach: The Enterprise Memory

Despite Copilot’s current limitations (it’s pretty terrible today with embarrassingly small context windows), Microsoft’s strategy is clear: own the enterprise memory layer through Office integration. They’re betting that enterprise users will accept weaker AI if it’s deeply integrated with their existing tools and data.

Never underestimate the power of Microsoft’s enterprise entrenchment. In the consumer market, better AI wins. But in the enterprise, integration and compliance often matter more than capability. Still, with Copilot’s context limitations, they’re currently bringing a knife to a gunfight.

The Platform Wars Playbook

We’ve seen this movie before. In the 1990s, browsers were just viewers for HTML. Then they became platforms. The eventual winner, Google’s Chrome, did not arrive until 2008, and it wasn’t the best at viewing websites. It was the best at being extended, integrated, and embedded.

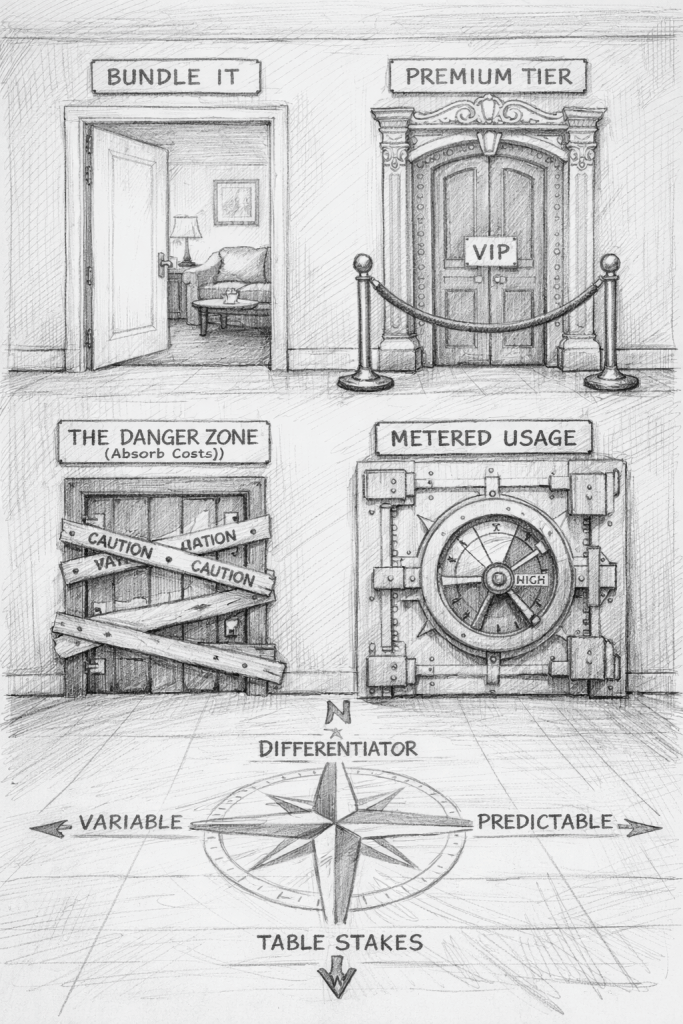

The same playbook is unfolding with AI context windows:

Step 1: Expand the Territory

First, make the context window so large that competitors look cramped. Google’s 2M-token window makes everyone else look stingy. It’s the equivalent of Gmail launching with 1GB of storage when rival webmail services offered only a few megabytes.

Step 2: Create Lock-in Through Value

Then, make switching painful, not through tricks but through genuine accumulated value. Every conversation, every project, every preference expressed increases the switching cost.

Step 3: Extend the Platform

Finally, open the platform to developers. Let them build on your memory layer. Create an ecosystem where third-party apps can leverage your context understanding. Make your memory layer the default for new AI applications.

We’re currently between Steps 1 and 2. The leaders are expanding context windows and building persistence features. But Step 3—the platform play—is where the real battle will be fought.

The Implications Nobody’s Talking About

The End of File Systems

When AI can hold millions of words of context and remember across sessions, why do we need files? The file system is a human workaround for limited memory. In an AI-native world, work becomes a continuous stream of context, not discrete documents.

The New Digital Divide

As context windows expand, we’re creating a new inequality: between those who can afford large-context AI and those who can’t. It’s not just about access to AI. It’s about access to AI that remembers, understands, and grows with you.

The Memory Sovereignty Problem

When an AI knows everything about your work, who owns that understanding? Can you export it? Delete it? Prevent it from being used to train future models? We’re creating the most intimate digital relationships in history with no framework for governing them.

The Collaboration Paradox

As individual AI relationships deepen, sharing becomes harder. How do you collaborate when your AI has thousands of hours of context your colleague’s doesn’t? We might be building better solo work tools at the expense of teamwork.

The Road Ahead: Three Scenarios

Scenario 1: The Platform Consolidation

One player (Google? OpenAI? Anthropic?) achieves a decisive victory by combining superior context windows, persistence, and platform integration. AI memory becomes like email: theoretically open but practically dominated by one provider. Innovation slows but reliability improves.

Scenario 2: The Protocol Peace

Pressure from users and regulators forces interoperability. We get standard formats for AI context export/import. Memory becomes portable, and competition thrives on features rather than lock-in. This is the best outcome for users but the least likely given platform incentives.

Scenario 3: The Specialization Spread

Different platforms win different use cases. Anthropic owns professional writing and analysis. Google owns general productivity. Specialized players own verticals like coding, design, and research. Context becomes fragmented across platforms, creating new integration challenges.
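To make the "standard formats for AI context export/import" of Scenario 2 concrete, here is a minimal sketch of what a portable bundle might look like. Everything in it is hypothetical: no such standard exists today, and `ContextBundle`, `SCHEMA_VERSION`, `export_bundle`, and `import_bundle` are invented for illustration. It also covers only the explicit, enumerable part of context (stated preferences and project notes), not the implicit understanding that is much harder to serialize.

```python
import json
from dataclasses import asdict, dataclass, field

SCHEMA_VERSION = "0.1"  # hypothetical version tag; not a real standard


@dataclass
class ContextBundle:
    """The explicit, portable slice of a user's accumulated context."""

    owner: str
    preferences: dict[str, str] = field(default_factory=dict)
    project_notes: dict[str, list[str]] = field(default_factory=dict)


def export_bundle(bundle: ContextBundle) -> str:
    """Serialize a bundle to JSON with a version tag."""
    payload = {"schema_version": SCHEMA_VERSION, "bundle": asdict(bundle)}
    return json.dumps(payload, indent=2, sort_keys=True)


def import_bundle(text: str) -> ContextBundle:
    """Parse a bundle, rejecting versions this code does not understand."""
    payload = json.loads(text)
    if payload.get("schema_version") != SCHEMA_VERSION:
        raise ValueError("unsupported schema version")
    return ContextBundle(**payload["bundle"])


if __name__ == "__main__":
    original = ContextBundle(
        owner="alice",
        preferences={"tone": "concise"},
        project_notes={"thesis": ["Chapter 2 draft is due Friday"]},
    )
    restored = import_bundle(export_bundle(original))
    print(restored == original)
```

The version check is the important design choice. A portable format is only useful if an importing platform can refuse, or migrate, bundles written under rules it does not know.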

Conclusion: The Context is the Product

We’re watching the birth of a new platform layer. Not an operating system for computers, but an operating system for thought. The winners won’t just be the ones with the biggest context windows—they’ll be the ones who understand that context is relationship, and relationship is everything.

The file was the atomic unit of the PC era. The page was the atomic unit of the web era. Context is becoming the atomic unit of the AI era. And whoever controls the context layer controls the future of knowledge work.

The memory wars are here. And most people don’t even know they’re fighting with goldfish while others are building elephants.

The question isn’t whether you’ll need AI with memory. It’s whether you’ll own that memory—or whether it will own you.

Choose your platform carefully. The switching costs are about to become astronomical.