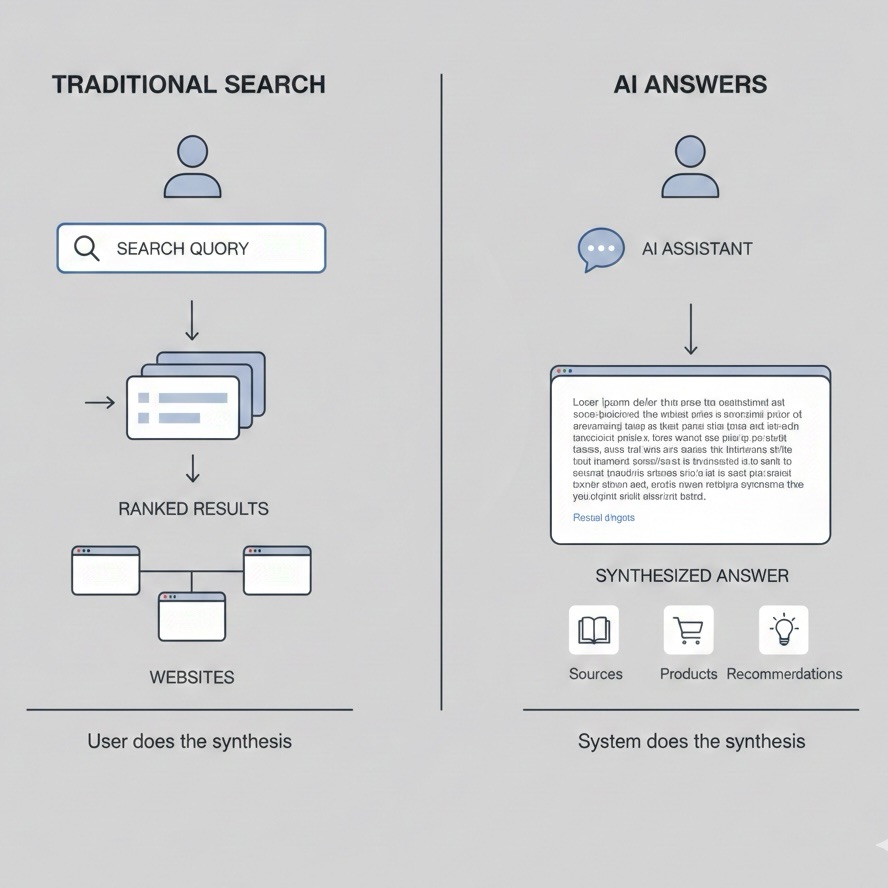

Your customers have stopped “searching” for products. They ask AI assistants, receive synthesized answers with shortlists and recommendations, then make decisions—often without ever visiting your website.

This shift is measurable, and it already shows up in referral behavior. Similarweb estimates AI platforms drove 1.13B visits to the top 1,000 websites in June 2025, up 357% year over year.

What matters now is how discovery works inside AI.

Search gave you a list of links. AI gives you a conclusion. If your brand is not included in the sources the model uses to build that conclusion, you do not exist at the moment someone decides.

AthenaHQ’s 2026 State of AI Search report analyzed 8 million AI responses across major models and found a brutal winner-take-most dynamic: the average brand shows up in about 17% of relevant answers, while top brands show up in the mid-50% range.

This playbook shows you how to get on that shortlist.

How AI Answers Get Built

In the old search-driven world, good SEO meant your site appeared as a link for relevant keywords. In the AI-driven world, the system synthesizes an answer from a set of sources. If you’re not one of those sources, you do not exist in that answer.

AI assistants create answers by assembling information from sources they can access and trust. So the first strategic question is which sources an AI answer engine actually pulls from when it builds a response.

Analysis of the major LLMs and chatbots shows answers typically pull from multiple domains, and the domains that dominate citations are not random. Reddit, YouTube, Wikipedia, LinkedIn, and major publishers show up repeatedly.

That insight should reshape your strategy immediately. Your website matters, but it rarely carries the answer alone.

Commerce is moving in the same direction. OpenAI’s shopping update indicates ChatGPT shopping results rely on structured metadata from third parties such as pricing, product descriptions, and reviews. https://techcrunch.com/2025/04/28/openai-upgrades-chatgpt-search-with-shopping-features/

Translation: your off-site presence matters almost as much as your website, and sometimes more.

The Operating Goal

Stop chasing rank. Start managing answer share.

This means you need to start tracking metrics such as prompt-level visibility, citations, sentiment, and lead outcomes.

Your team needs a new question in weekly marketing reviews: Where do we show up in the answers that drive purchase intent?

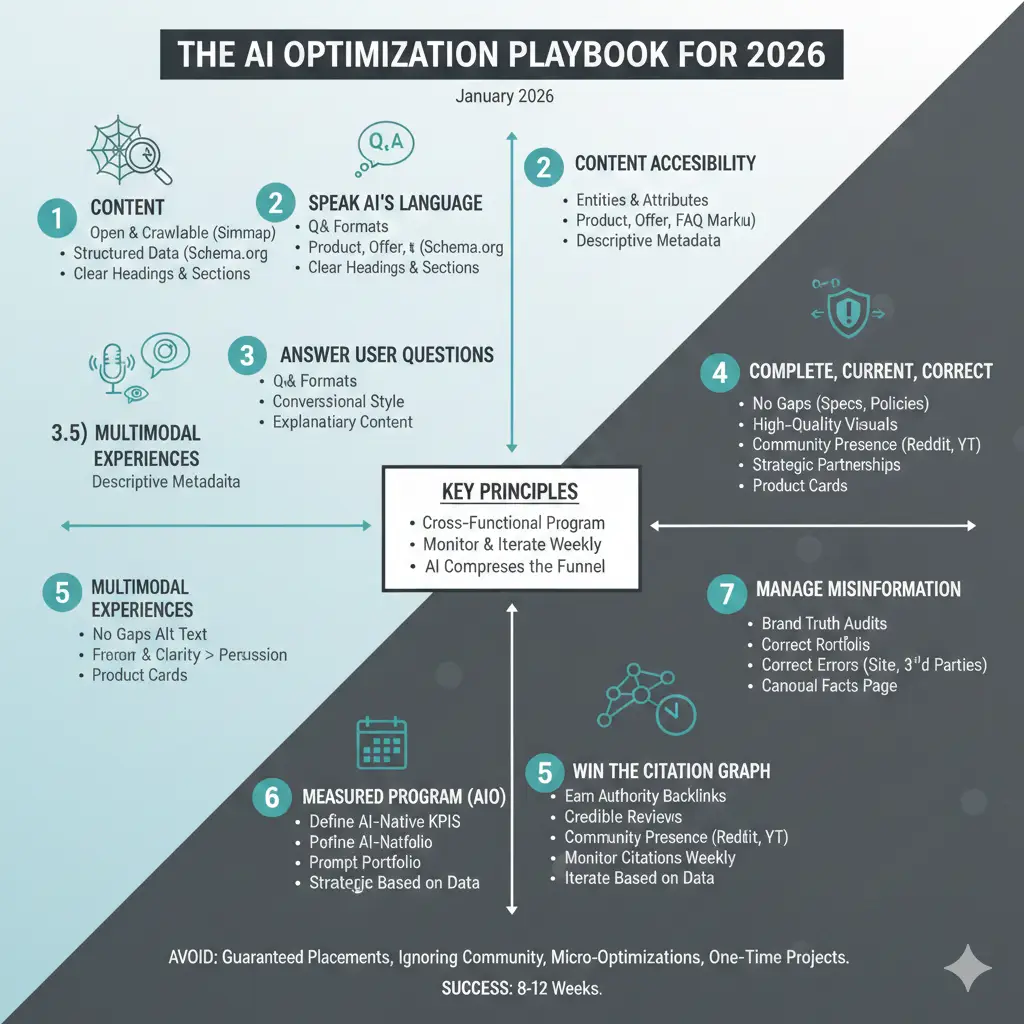

The Playbook

1) Make Your Content Accessible to AI Systems

This is the foundation. If AI systems can’t access your content, none of the rest matters.

Keep content open and crawlable

Many AI answer experiences depend on search crawlers and indexes. Practical checks:

- Confirm your pages are indexable (no accidental noindex tags).

- Keep your sitemap current.

- Avoid putting essential product/service information behind logins, paywalls, or interactive layers that do not render cleanly for crawlers.
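The first two checks are easy to script against your robots.txt and page HTML. The sketch below uses Python's standard-library `urllib.robotparser` and `html.parser`; the list of crawler user-agent tokens is an assumption you should adjust to the AI surfaces you care about, and the code only reads robots.txt text you supply rather than fetching it.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Assumed crawler tokens; not an exhaustive or authoritative list.
CRAWLER_AGENTS = ["Googlebot", "Bingbot", "GPTBot", "OAI-SearchBot", "PerplexityBot"]


def blocked_agents(robots_txt: str, url: str, agents=CRAWLER_AGENTS) -> list:
    """Return the user agents that this robots.txt blocks from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


class RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.directives.append((attrs.get("content") or "").lower())


def has_noindex(html: str) -> bool:
    """True if any robots meta tag on the page contains 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return any("noindex" in directive for directive in parser.directives)


robots = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nAllow: /\n"
print(blocked_agents(robots, "https://example.com/pricing"))  # ['GPTBot']
```

Run it against your live robots.txt and a sample of money pages. Any agent in the returned list cannot fetch that URL under your robots rules, whatever else you do on the page.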

Keep critical information in HTML, not locked formats

PDFs and image-only pages are a recurring failure point. They can sometimes be indexed, but they lack the structured signals and predictable extraction that AI systems rely on. Microsoft’s own guidance for AI search inclusion stresses content structure and self-contained phrasing because systems extract sections and evaluate them independently.

A blunt rule I use: if a customer decision depends on it, it belongs as text on a page.

Structure pages for extraction

AI does not read your page the way a person does. It segments your page, then selects snippets.

Do this:

- Use clear headings that reflect real user questions.

- Create short sections with explicit labels (pricing, specs, requirements, limitations, use cases).

- Ensure sentences still make sense when removed from the context of the rest of the page.

Microsoft explicitly recommends self-contained phrasing for inclusion in AI answers.

Keep the classic SEO basics

Traditional technical SEO still affects whether content is eligible for inclusion in AI answers, especially on Bing/Copilot surfaces. Microsoft’s guidance is explicit: crawlability and fundamentals remain essential.

Quick field test: pick one of your money pages, copy a paragraph out of context, and ask: “Would a stranger understand what this product is and why it matters from this paragraph alone?” If the answer is no, the AI will struggle too.
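Part of this field test can be automated as a first pass before a human reads the page. The sketch below is a hypothetical heuristic, not a model of how any AI system segments pages: it flags paragraphs that open with a word pointing back to earlier context, or that never name the product or category you pass in as `key_terms`.

```python
import re

# Hypothetical list of words that usually refer back to earlier context
# when they open a paragraph. Tune it for your own copy.
DANGLING_OPENERS = {"it", "this", "that", "these", "those", "they", "its", "such", "here"}


def extraction_risks(paragraph: str, key_terms: list) -> list:
    """Return reasons a paragraph might not stand on its own once extracted."""
    reasons = []
    words = re.findall(r"[A-Za-z']+", paragraph)
    if words and words[0].lower() in DANGLING_OPENERS:
        reasons.append(f"opens with context-dependent word '{words[0]}'")
    lowered = paragraph.lower()
    if not any(term.lower() in lowered for term in key_terms):
        reasons.append("never names the product or category")
    return reasons


terms = ["Acme X2", "blender"]
print(extraction_risks("It crushes ice in seconds.", terms))
print(extraction_risks("The Acme X2 is a countertop blender that crushes ice.", terms))  # []
```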

2) Speak the AI’s Language With Structured Data and Metadata

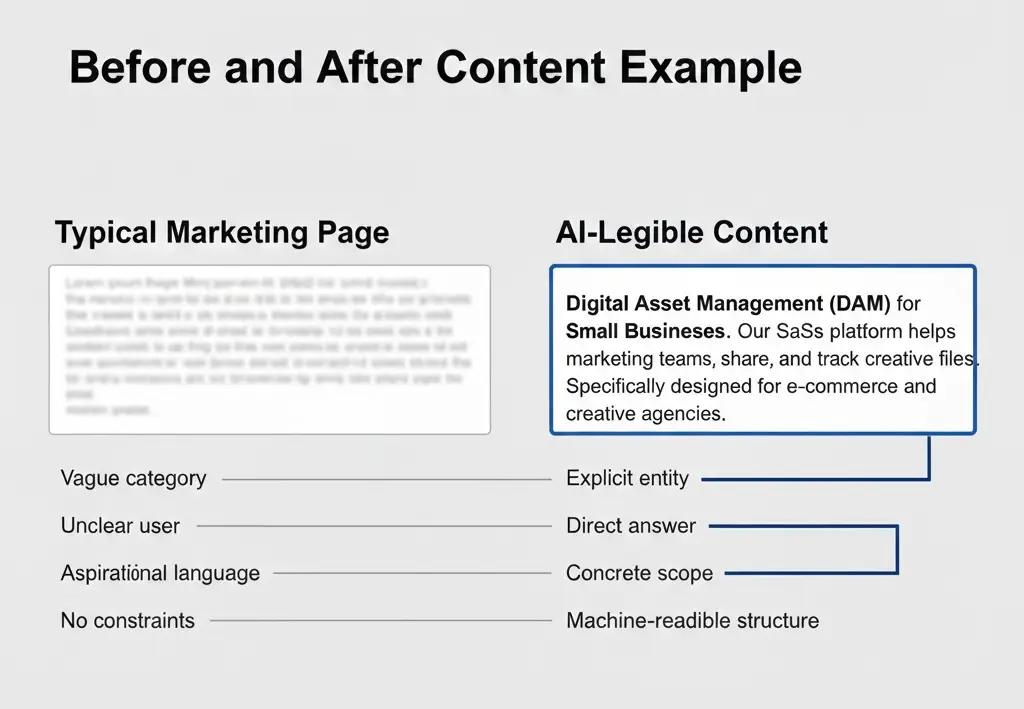

AI answers are built around entities and attributes. Ambiguity is poison.

Your site needs a direct, literal definition of what you sell, who it is for, and where it fits. Category, use case, constraints.

A weak definition creates two outcomes:

- You get generalized into a broad bucket and lose differentiation.

- You get skipped for a clearer alternative.

Schema matters here.

Use schema.org markup to label your products, offers, reviews, FAQs, and organization. It reduces guesswork and supports extraction.

Use schema to make your product/service attributes explicit:

- Product, Offer, AggregateRating (for commerce)

- Organization and local business entities

- FAQPage where Q&A makes sense

Do not “schema-spam.” Use it to clarify reality.
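For commerce pages, the Product, Offer, and AggregateRating types above are usually emitted as JSON-LD. This is a minimal Python sketch that generates such a block; the product details are hypothetical, and the helper name and parameters are illustrative rather than part of any library. Only mark up prices and ratings that actually appear on the page.

```python
import json


def product_jsonld(name, description, sku, brand, price, currency,
                   rating, review_count, url, in_stock=True):
    """Build schema.org Product markup with a nested Offer and AggregateRating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "sku": sku,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "url": url,
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Wrap the JSON-LD in a script tag that can be placed in the page HTML.
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


markup = product_jsonld("Acme X2 Blender", "1.5 L countertop blender that crushes ice.",
                        "X2-001", "Acme", 129, "USD", 4.6, 212, "https://example.com/x2")
print(markup)
```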

This matters even more as shopping and recommendation flows increasingly use structured metadata. OpenAI’s shopping update points to that dependency.

Make metadata do real work.

Titles, H1s, and meta descriptions should describe the page in plain language. Treat them as compact summaries a retrieval system can use quickly.
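To catch pages where these fields are simply missing, a small standard-library check is enough as a starting point. This sketch only tests presence, not quality, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collects the <title> text, <h1> text, and meta description of a page."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": "", "h1": "", "meta_description": ""}
        self._open = None  # "title" or "h1" while inside that element

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._open = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.fields["meta_description"] = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == self._open:
            self._open = None

    def handle_data(self, data):
        if self._open:
            self.fields[self._open] += data


def missing_metadata(html: str) -> list:
    """Names of the three summary fields that are absent or empty."""
    parser = MetadataParser()
    parser.feed(html)
    return [name for name, value in parser.fields.items() if not value.strip()]


print(missing_metadata("<title>Acme X2 Blender: specs and pricing</title><h1></h1>"))
```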

3) Rewrite Content to Answer Questions People Actually Ask

Informational and comparative content dominates AI citations. Blogs and homepages are primary entry points.

If your content strategy is mostly brand storytelling and campaign pages, you will lose answer visibility to companies that publish clear explainers and comparisons.

Use Q&A formats where they fit naturally

Make questions explicit. Make answers direct.

Examples:

- “Can this blender crush ice?”

- “What are the warranty terms?”

- “Who is this product designed for?”

- “What does implementation take?”

This structure maps cleanly to how AI systems assemble answers.
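Where a page already uses this question-and-answer structure, the same pairs can be mirrored in FAQPage markup. A minimal sketch, assuming hypothetical answers; keep the marked-up text identical to what readers see on the page.

```python
import json


def faq_jsonld(pairs):
    """Turn (question, answer) pairs into a schema.org FAQPage object."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical answers for illustration; use the exact wording shown on the page.
faq = faq_jsonld([
    ("Can this blender crush ice?", "Yes. The Acme X2 crushes ice with its 1,200 W motor."),
    ("What are the warranty terms?", "Five years on the motor, one year on the jar."),
])
print(json.dumps(faq, indent=2))
```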

Write like you’re explaining to a smart friend

Most marketing pages are optimized for persuasion and tone. AI answer inclusion rewards clarity. A good test:

- Can a reader repeat your description accurately after one pass?

- Are you naming the category, use cases, and constraints clearly?

What to publish:

- Clear explainers that define the category and criteria.

- Comparison pages that handle tradeoffs honestly.

- FAQs that answer the questions people ask right before they buy.

Keep the tone crisp, clear, and easy to understand. AI systems reward specificity. Humans trust specificity too.

Example style that performs well:

- “Designed for X role doing Y job in Z environment.”

- “Works well when the constraint is A. Not designed for B.”

- “Customers choose this when they need C.”

You are giving the model stable hooks for selection.

4) Optimize for Multimodal AI Experiences

AI answers are increasingly visual, voice-activated, and interactive. Text-only optimization leaves money on the table.

Make visual content AI-readable

Recent research shows models increasingly pull from images, videos, and visual content to build answers. Your product images, infographics, and diagrams need:

- Descriptive alt text that explains the insight, not just labels the image

- High-quality images with clear text and diagrams (avoid blurry screenshots)

- Image file names that describe content (use wireless-earbuds-battery-comparison-chart.jpg, not IMG_4736.jpg)
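The alt-text and file-name checks can be run across a page's HTML in one pass. The sketch below flags images with missing or very short alt text and camera-default file names like IMG_4736.jpg; the five-word alt-text minimum and the generic-name pattern are assumptions to tune, not published thresholds.

```python
import posixpath
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

# Assumed thresholds; tune them for your own content.
MIN_ALT_WORDS = 5
GENERIC_NAME = re.compile(r"^(img|dsc|image|photo|screenshot)[-_ ]?\d*$", re.IGNORECASE)


class ImageAuditor(HTMLParser):
    """Records (src, issue) pairs for images that are hard for machines to read."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src") or ""
        alt = (attrs.get("alt") or "").strip()
        # File name without directory or extension, e.g. "IMG_4736".
        stem = posixpath.splitext(posixpath.basename(urlparse(src).path))[0]
        if len(alt.split()) < MIN_ALT_WORDS:
            self.issues.append((src, "alt text missing or too short"))
        if GENERIC_NAME.match(stem):
            self.issues.append((src, "generic file name"))


def audit_images(html: str) -> list:
    auditor = ImageAuditor()
    auditor.feed(html)
    return auditor.issues


print(audit_images('<img src="/media/IMG_4736.jpg" alt="chart">'))
```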

Example: A SaaS company I worked with added detailed alt text to their architecture diagrams. Within 6 weeks, they appeared in 34% more AI-generated technical comparisons because models could “read” and cite their visual explanations.

Optimize for voice and conversational queries

Voice-activated AI assistants (Alexa, Siri, Gemini) are shaping B2C purchase decisions. Voice queries are longer and more conversational than typed searches.

Optimize by:

- Including natural language variations of product questions in your content

- Using conversational sentence structures that sound natural when read aloud

- Creating content that directly answers “near me,” “open now,” and “available today” queries for local businesses

Research from Stanford’s HAI shows voice queries have 3x higher purchase intent than text searches when they reach the consideration stage.

Prepare for AI-generated product cards and snapshots

Perplexity, ChatGPT, and Gemini now generate visual product cards synthesizing information from multiple sources.

What gets pulled:

- Your product images (high-resolution product shots, lifestyle images)

- Pricing in structured format

- Star ratings and review counts

- Key specs in scannable format

Action: Audit your product pages. Can an AI extract a clean 3-5 bullet feature list? Is your pricing table machine-readable (HTML table, not image)? Do you have high-quality hero images?
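The audit questions above can be approximated in code. This hypothetical sketch checks whether a product page contains at least one HTML table, a bullet list of 3-5 items, and any image whose file name or alt text suggests pricing baked into a graphic. It is a rough first pass, not a replica of how any assistant builds product cards.

```python
from html.parser import HTMLParser


class ProductPageAudit(HTMLParser):
    """Counts a few extraction-friendly structures on a product page."""

    def __init__(self):
        super().__init__()
        self.tables = 0
        self.list_sizes = []  # item count of each top-level <ul>/<ol>
        self.pricing_images = 0
        self._list_depth = 0
        self._items = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "table":
            self.tables += 1
        elif tag in ("ul", "ol"):
            self._list_depth += 1
            if self._list_depth == 1:
                self._items = 0
        elif tag == "li" and self._list_depth == 1:
            self._items += 1
        elif tag == "img":
            # Hypothetical heuristic: "pric" in the file name or alt text
            # suggests prices rendered as an image instead of text.
            label = f"{attrs.get('src') or ''} {attrs.get('alt') or ''}".lower()
            if "pric" in label:
                self.pricing_images += 1

    def handle_endtag(self, tag):
        if tag in ("ul", "ol") and self._list_depth > 0:
            if self._list_depth == 1:
                self.list_sizes.append(self._items)
            self._list_depth -= 1


def audit_product_page(html: str) -> dict:
    audit = ProductPageAudit()
    audit.feed(html)
    return {
        "has_html_table": audit.tables > 0,
        "has_3_to_5_item_list": any(3 <= n <= 5 for n in audit.list_sizes),
        "pricing_as_image": audit.pricing_images > 0,
    }


page = ("<ul><li>Crushes ice</li><li>1.5 L jar</li><li>5-year warranty</li></ul>"
        "<table><tr><td>Price</td><td>$129</td></tr></table>")
print(audit_product_page(page))
```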

5) Publish Complete, Current, Correct Information

AI answers tend to reward completeness. They also punish gaps because gaps force the model to fill in uncertainty with other sources.

Do not withhold decision-critical attributes. If a customer filters on it, an AI will filter on it.

Examples by category:

- Ecommerce: dimensions, materials, allergens, compliance, compatibility, warranties, shipping, return policies.

- B2B: integration requirements, onboarding time, pricing model, security posture, buyer and user personas.

- Services: deliverables, timeline, typical engagement structure, proof points, what you do not do

If your site is missing something, a competitor’s cited source fills that gap.
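A completeness check is easy to script once your product data lives in structured fields. The attribute keys below follow the category lists above, but the field names and the `missing_attributes` helper are hypothetical; map them to your own catalog or CMS.

```python
# Decision-critical attributes per category, taken from the lists above.
# The field names are hypothetical; map them to your own catalog or CMS.
REQUIRED_ATTRIBUTES = {
    "ecommerce": ["dimensions", "materials", "allergens", "compliance",
                  "compatibility", "warranty", "shipping", "return_policy"],
    "b2b": ["integration_requirements", "onboarding_time", "pricing_model",
            "security_posture", "buyer_personas", "user_personas"],
    "services": ["deliverables", "timeline", "engagement_structure",
                 "proof_points", "out_of_scope"],
}


def missing_attributes(category: str, page_facts: dict) -> list:
    """Required attributes that are absent or blank for one page."""
    missing = []
    for attr in REQUIRED_ATTRIBUTES[category]:
        value = page_facts.get(attr)
        if value is None or not str(value).strip():
            missing.append(attr)
    return missing


print(missing_attributes("services", {
    "deliverables": "Audit report and roadmap",
    "timeline": "6 weeks",
    "engagement_structure": "",
    "proof_points": "3 published case studies",
}))  # ['engagement_structure', 'out_of_scope']
```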

Maintain freshness where freshness matters

For categories like “best X this year,” recency influences inclusion. Microsoft’s guidance focuses on foundations that make content easier to process and assemble; in practice, freshness is part of what keeps content eligible.

Practical workflow:

- Add “Updated for 2026” when the content truly is updated.

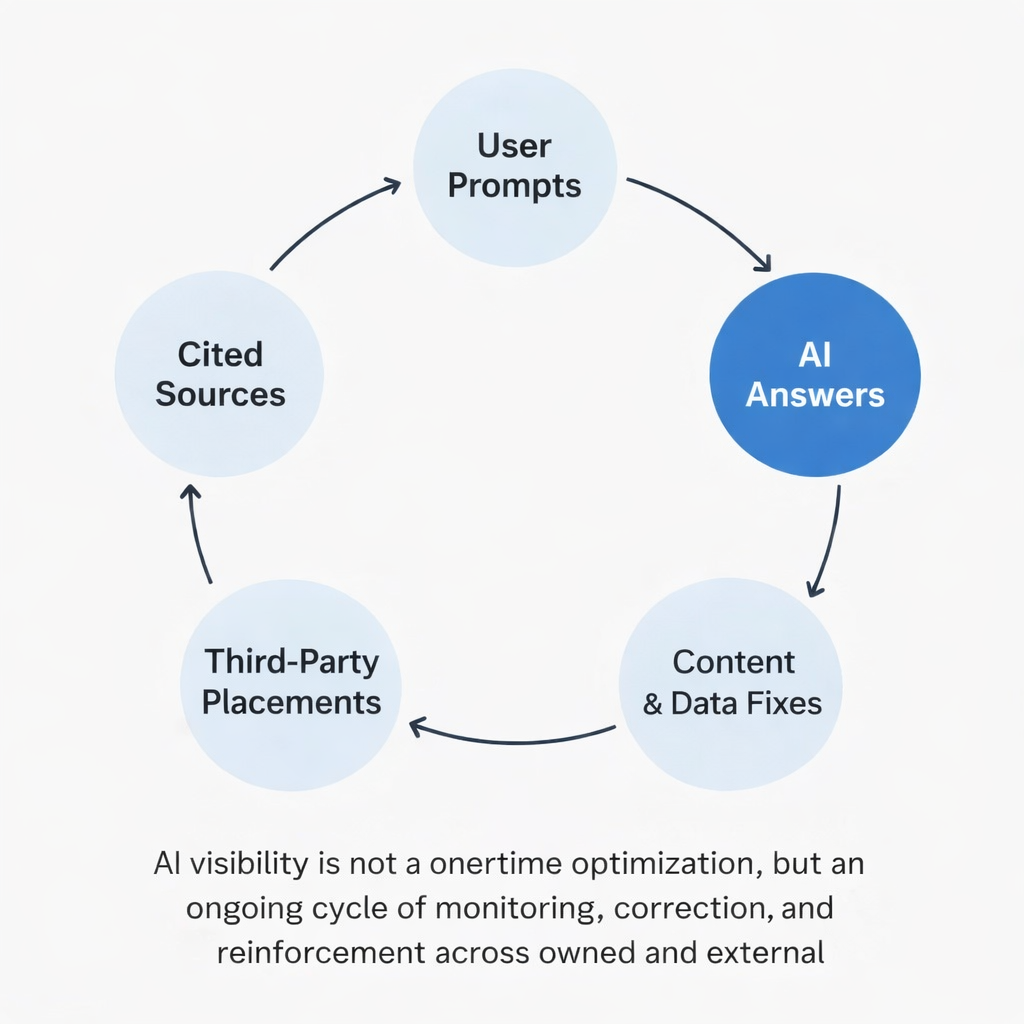

- Establish a review cadence: prompts → answers → citations → fixes → placements → repeat

- Redirect or clearly link old listicles and guides to the new version.

- Avoid contradicting yourself across old press releases and new pages.
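The review cadence and the rule of only claiming updates that really happened can both be checked from a simple content inventory. In this sketch, the 90-day cadence, the inventory format, and the "Updated for YYYY" title pattern are assumptions.

```python
import re
from datetime import date, timedelta

REVIEW_CADENCE_DAYS = 90  # assumed cadence; set your own


def overdue_pages(pages: dict, today: date, cadence_days: int = REVIEW_CADENCE_DAYS) -> list:
    """URLs whose last review is older than the review cadence allows."""
    cutoff = today - timedelta(days=cadence_days)
    return [url for url, info in pages.items() if info["last_reviewed"] < cutoff]


def unsupported_update_claims(pages: dict) -> list:
    """URLs whose title says 'Updated for YYYY' without a review in that year."""
    flagged = []
    for url, info in pages.items():
        match = re.search(r"Updated for (\d{4})", info["title"])
        if match and int(match.group(1)) != info["last_reviewed"].year:
            flagged.append(url)
    return flagged


inventory = {
    "/best-blenders": {"title": "Best Blenders (Updated for 2026)",
                       "last_reviewed": date(2025, 11, 2)},
    "/x2-specs": {"title": "Acme X2 Specs", "last_reviewed": date(2026, 1, 10)},
}
print(overdue_pages(inventory, today=date(2026, 3, 1)))  # ['/best-blenders']
print(unsupported_update_claims(inventory))              # ['/best-blenders']
```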

Correct errors aggressively

AI can repeat outdated or incorrect claims if those are present in crawled sources. Your job is to reduce ambiguity and publish canonical, current truth in multiple places.

When you see a wrong claim in an AI answer:

- Find the likely source.

- Fix your own site first.

- Then seed clarification in a credible source AI already cites in your category.

6) Win the Citation Graph

This is where most teams underperform, then act surprised when they do not show up in answers.

Remember the top cited domains: Reddit, YouTube, Wikipedia, LinkedIn, and major publishers. You need a plan to show up in the sources that repeatedly appear in AI answers for your category.

This is not generic PR. It’s targeted placement in the places that shape answer synthesis.

Practical moves:

- Earn inclusion in comparison articles and “best of” lists that actually rank and get cited.

- Invest in credible reviews with real tradeoffs, not paid fluff.

- Participate in community threads where buyers ask “what should I choose,” especially in categories where Reddit dominates citations.

- Publish YouTube content with transcripts that clearly describe use cases and constraints.

Mentions matter more than links for LLM visibility, so build strategic partnerships with the authorities AI already cites.

The citation graph isn’t random—it’s predictable. Analysis of 2M+ AI citations shows:

- Academic institutions and research labs: cited in 67% of technical/scientific queries

- Industry analysts (Gartner, Forrester): cited in 73% of B2B software comparisons

- Independent testing labs (Consumer Reports, Wirecutter): cited in 81% of product recommendations

- Professional communities (Stack Overflow, GitHub): cited in 89% of developer tool queries

Instead of chasing hundreds of random mentions, prioritize 3-5 high-authority sources AI already trusts in your category.
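To find those 3-5 sources for your own category, measure which domains dominate the answers you test instead of relying on aggregate figures. Assuming you log the cited URLs for each AI answer (the logging format here is hypothetical), this sketch computes the share of answers that cite each domain at least once.

```python
from collections import Counter
from urllib.parse import urlparse


def citation_share(answers: list) -> dict:
    """Share of logged answers that cite each domain at least once.

    `answers` holds one list of cited URLs per tested AI answer.
    """
    counts = Counter()
    for cited_urls in answers:
        # Count each domain once per answer, ignoring a leading "www.".
        domains = {urlparse(u).netloc.lower().removeprefix("www.") for u in cited_urls}
        counts.update(domains)
    if not answers:
        return {}
    return {domain: n / len(answers) for domain, n in counts.most_common()}


log = [
    ["https://www.reddit.com/r/blenders/comments/abc", "https://en.wikipedia.org/wiki/Blender"],
    ["https://reddit.com/r/Cooking/comments/def"],
    ["https://www.youtube.com/watch?v=xyz"],
    [],
]
print(citation_share(log))
```

Counting each domain once per answer keeps one heavily linked thread from inflating a domain's share.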

Tactical example: A cybersecurity vendor I advised invested in:

- Co-authoring a technical paper with a university research lab (appeared in 44% of AI answers about their category within 3 months)

- Getting included in Gartner’s market guide (cited in 67% of enterprise comparison queries)

- Active GitHub presence with detailed documentation (became the default cited source for implementation questions)

Result: 340% increase in qualified pipeline from AI-driven research.

7) Run AIO as a Measured Program, Not a One-Off Project

Define AI-native KPIs such as share of voice, citations, sentiment, prompt-level performance, and lead outcomes. Avoid chasing noise and short-term volatility.

Define your prompt portfolio. List the questions you want to win, grouped by intent:

- “What is X?”

- “Best X for Y”

- “X vs Y”

- “How do I choose X?”

Keep it tied to revenue and pipeline, not vanity.
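One way to keep the portfolio consistent week to week is to generate it from intent templates. The templates mirror the four intents above; the placeholder names and example values are hypothetical.

```python
from itertools import product

# Intent groups from the portfolio above; placeholder names are hypothetical.
TEMPLATES = {
    "definition": ["What is a {category}?"],
    "best_for": ["Best {category} for {use_case}"],
    "comparison": ["{brand} vs {competitor}"],
    "selection": ["How do I choose a {category}?"],
}


def build_portfolio(category, brand, use_cases, competitors):
    """Expand each intent template; needs at least one use case and competitor."""
    portfolio = {}
    for intent, templates in TEMPLATES.items():
        prompts = {
            template.format(category=category, brand=brand,
                            use_case=use_case, competitor=competitor)
            for template, use_case, competitor in product(templates, use_cases, competitors)
        }
        portfolio[intent] = sorted(prompts)
    return portfolio


portfolio = build_portfolio("portable blender", "Acme X2",
                            use_cases=["smoothies", "travel"],
                            competitors=["BlendCo Mini"])
for intent, prompts in portfolio.items():
    print(intent, prompts)
```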

Monitor inclusion and citation sources weekly

Track:

- Whether you are mentioned

- Whether you are described correctly

- Which sources the model is citing for your category

When competitors dominate, do not guess. Study the citation sources and content formats that are winning.
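The tracking questions above reduce to a couple of ratios per intent. This sketch assumes a hypothetical record per tested prompt and reports answer share (how often you are mentioned) and accuracy (how often a mention describes you correctly).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    """One tested prompt in one week (a hypothetical logging format)."""
    prompt: str
    intent: str
    mentioned: bool
    described_correctly: bool


def weekly_summary(observations: list) -> dict:
    """Answer share and accuracy per intent group."""
    grouped = defaultdict(list)
    for obs in observations:
        grouped[obs.intent].append(obs)
    summary = {}
    for intent, rows in grouped.items():
        mentions = [r for r in rows if r.mentioned]
        summary[intent] = {
            "answer_share": len(mentions) / len(rows),
            # Accuracy is only defined when you were mentioned at least once.
            "accuracy": (sum(r.described_correctly for r in mentions) / len(mentions)
                         if mentions else None),
        }
    return summary


week = [
    Observation("Best portable blender for travel", "best_for", True, True),
    Observation("Best portable blender for smoothies", "best_for", False, False),
    Observation("Acme X2 vs BlendCo Mini", "comparison", True, False),
]
print(weekly_summary(week))
```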

Iterate based on evidence

Your response should map to what you learn:

- Missing attributes on your own pages

- Lack of comparative content

- Lack of third-party references on top cited domains

- Misrepresentation driven by outdated sources

This is where tools like AthenaHQ can help. They are instrumentation. They are not a cheat code.

8) Monitor and Manage AI-Generated Misinformation About Your Brand

AI systems will say things about your company that are incomplete, outdated, or flat wrong. If you don’t monitor and correct this, it compounds.

The “AI hallucination tax” is real

Research from NYU’s AI Now Institute shows 23% of AI-generated brand information contains factual errors. These errors get repeated across sessions, embedded in user memory, and cited as fact.

Common misinformation patterns I see:

- Outdated pricing or product specs (from old blog posts or press releases)

- Conflation with competitors (“Company X, which was acquired by Company Y” when that never happened)

- Incorrect geographic or market positioning (“primarily serves European customers” when you’re US-focused)

- Phantom features (attributes your competitor has, incorrectly assigned to you)

Run monthly “brand truth audits”

Test how major AI platforms describe your company:

Prompts to test monthly:

- “What is [your company] and what do they offer?”

- “Compare [your company] to [top competitor]”

- “What are the pricing options for [your product]?”

- “Who should use [your product] vs [competitor]?”

- “What are the limitations of [your product]?”

Document every error. Track which sources AI cites for incorrect information.
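The prompt list and part of the error log can be scripted. This sketch fills the five audit prompts above with your names and flags dollar amounts in an AI answer that do not appear on your canonical price list; the placeholder names are hypothetical, and the price check assumes plain US-dollar figures without thousands separators.

```python
import re

AUDIT_PROMPTS = [
    "What is {company} and what do they offer?",
    "Compare {company} to {competitor}",
    "What are the pricing options for {product}?",
    "Who should use {product} vs {competitor}?",
    "What are the limitations of {product}?",
]


def audit_prompts(company: str, product: str, competitor: str) -> list:
    return [p.format(company=company, product=product, competitor=competitor)
            for p in AUDIT_PROMPTS]


def unknown_prices(answer_text: str, canonical_prices: set) -> list:
    """Dollar amounts in an AI answer that are not on your canonical price list.

    Assumes plain figures like $129 or $129.99 (no thousands separators).
    """
    found = re.findall(r"\$(\d+(?:\.\d{2})?)", answer_text)
    return sorted({f"${amount}" for amount in found if float(amount) not in canonical_prices})


print(audit_prompts("Acme", "Acme X2", "BlendCo"))
print(unknown_prices("The Acme X2 costs $149, or $129 on sale.", {129.0}))  # ['$149']
```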

Correct misinformation systematically

When you find errors:

- Fix your own site first – Update the most visible, crawlable pages

- Publish a “canonical facts” page – Create a dedicated /about/facts page with structured data marking it as authoritative

- Update third-party sources – Wikipedia, Crunchbase, LinkedIn, G2, Capterra

- Submit corrections – Both Bing and Google have feedback mechanisms for AI-generated content

- Create new, correct content that outweighs old sources – If a 2022 article is being cited with wrong information, publish updated 2026 content that replaces it in the citation graph
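For the canonical facts page, one plausible approach is an AboutPage whose main entity is your Organization, with `sameAs` links to the third-party profiles you keep in sync. The sketch below assumes that structure; the company details and profile URLs are placeholders, and the markup connects your facts to your other profiles rather than guaranteeing how any AI system weighs them.

```python
import json


def facts_page_jsonld(name, site_url, founding_date, description, same_as):
    """AboutPage markup whose main entity is the Organization, linked to its profiles."""
    base = site_url.rstrip("/")
    return {
        "@context": "https://schema.org",
        "@type": "AboutPage",
        "url": f"{base}/about/facts",
        "mainEntity": {
            "@type": "Organization",
            "name": name,
            "url": base,
            "foundingDate": founding_date,
            "description": description,
            # Third-party profiles that should state the same facts as this page.
            "sameAs": same_as,
        },
    }


# Placeholder company details and profile URLs for illustration.
facts = facts_page_jsonld(
    "Acme", "https://example.com/", "2014",
    "Acme makes countertop and portable blenders for US households.",
    ["https://www.linkedin.com/company/acme", "https://www.crunchbase.com/organization/acme"],
)
print(json.dumps(facts, indent=2))
```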

What I Would Avoid

A lot of the “AIO” market sells certainty. Reality is messier.

- Avoid anyone promising guaranteed placement in AI answers.

- Avoid treating brand reputation and citation accuracy as someone else’s problem. In the AI era, your marketing team needs to own “what AI says about us” the same way PR teams owned “what press says about us.” This requires dedicated resources and weekly monitoring.

- Avoid assuming your Tier-1 domains (your site, Wikipedia, Crunchbase) have accurate information. I audit these quarterly for every client because they drift. Someone updates Crunchbase with old data. A Wikipedia editor makes an incorrect edit. Your own site has contradictory information across pages. These become the sources AI cites.

- Avoid ignoring Reddit, Discord, and community forums where your category gets discussed. For B2B software, technical forums. For consumer products, Reddit and YouTube comments. AI pulls heavily from these, and they’re rarely monitored by marketing teams. You don’t need to “control” these spaces, but you need to know what’s being said and occasionally participate authentically to correct major misunderstandings.

- Avoid obsessive micro-optimizations that have no evidence behind them. Your competitors can spike visibility and lose it if they lack durable authority. Durable visibility comes from clarity, completeness, and being referenced in the citation graph the models already pull from.

- Avoid treating AI visibility as a one-time SEO project.

Closing

The playbook I’ve outlined requires discipline and patience. Companies that execute it see results in 8-12 weeks, not overnight. The winners share three characteristics:

- They treat AIO as a cross-functional program – not just marketing, but product, customer success, and legal all contribute to content accuracy and completeness

- They monitor and iterate weekly – tracking prompt performance, citation sources, and competitive visibility like they track search rankings

- They optimize for truth and clarity over persuasion – understanding that AI systems reward factual, comprehensive, well-structured information more than clever marketing copy

The companies losing this transition are those treating AI visibility as an SEO tactic rather than a fundamental shift in how customers discover and evaluate solutions.

AI answers compress the funnel. The shortlist shows up earlier. The decision happens faster. The companies that win are the ones that are easy to explain, easy to cite, and disciplined about monitoring how they appear.

If an AI can describe you clearly, it can recommend you. If it can’t, someone else gets the slot.

Sources

- https://www.similarweb.com/blog/insights/ai-news/ai-referral-traffic-winners/

- https://techcrunch.com/2025/07/25/ai-referrals-to-top-websites-were-up-357-year-over-year-in-june-reaching-1-13b/

- https://athenahq.ai/athena-state-of-ai-full-report-2026

- https://about.ads.microsoft.com/en/blog/post/october-2025/optimizing-your-content-for-inclusion-in-ai-search-answers

- https://techcrunch.com/2025/04/28/openai-upgrades-chatgpt-search-with-shopping-features/